Adaptive Agents for Real-Time RAG: Domain-Specific AI for Legal, Finance & Healthcare

Unleashing Intelligent RAG: How Adaptive Agents and Reflection Elevate Information Retrieval

Retrieval-Augmented Generation (RAG) has revolutionized how Large Language Models (LLMs) access and utilize external knowledge. By fetching relevant information before generating a response, RAG makes LLMs more factual and up-to-date. But what happens when the information sources are constantly changing, or the queries become complex, requiring nuanced understanding and reasoning? Standard RAG systems often hit a wall here: their workflows are static, so they adapt poorly to new data and to new kinds of questions.

Enter Real-time Agentic RAG, a sophisticated evolution of the RAG concept. Utilizing the Pathway framework, we've developed a system that doesn't just retrieve information – it dynamically deploys specialized AI "agents" to tackle queries with unprecedented adaptability and accuracy. This isn't just RAG; it's RAG with a thinking, collaborating team behind it.

Let's dive into how this innovative approach works, the unique challenges it addresses, and why it represents a significant step forward for intelligent information systems.

A Quick 3-Minute Summary of the Adaptive Agents for Real-Time RAG System:

Code Repository – Complete Setup & Usage Guide

The Limits of Traditional RAG

Traditional RAG pipelines, while powerful, often operate with a fixed structure: retrieve -> augment -> generate. This works well for static datasets but faces hurdles when dealing with:

- Real-time Data: Real-world knowledge isn't static. Financial markets shift, legal precedents evolve, medical records update. Static RAG struggles to keep pace.

- Complex Queries: Questions requiring information synthesis from multiple document sections or specific, nuanced analysis can overwhelm simple retrieval mechanisms.

- Contextual Nuance: Understanding how a piece of information fits within the larger document context is crucial for relevance, something basic chunking can miss.

- Efficiency and Accuracy: Retrieving too much irrelevant information increases processing load (token usage) and the risk of the LLM generating inaccurate or "hallucinated" responses based on noisy context.

These limitations highlight the need for a more flexible, intelligent, and context-aware RAG system.

Real-time Adaptive Agentic RAG: A Smarter Approach

Our solution reimagines the RAG process by introducing AI agents – specialized entities designed to perform specific tasks within the pipeline. Think of it like assembling a bespoke team of experts for every query. The core innovation lies in its adaptive nature.

Adaptive Agent Formation: Tailored Expertise on Demand

Instead of relying on a fixed set of tools, this system uses the CrewAI framework to generate agents at runtime, specifically tailored to the content of the document being queried.

- How it works: When a document (e.g., a complex contract, a patient's medical history, a financial report) is processed, the system analyzes its structure and content to create agents specializing in different sections or aspects (e.g., a 'Liability Clause Agent' for a contract, a 'Diagnosis History Agent' for medical records).

- Why it matters: This ensures that the agents handling a query possess the most relevant "expertise" for that specific document and the questions likely to be asked about it. This improves adaptability across diverse domains like Healthcare, Legal, and Finance, where document structures and information types vary significantly. Because agents are generated at runtime, the system also stays aligned with the latest data sources.
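As a rough illustration of the idea above, the sketch below derives one agent description per document section. It is a minimal sketch under stated assumptions, not the system's actual code: `AgentSpec` and `build_agent_specs` are hypothetical names, and the section titles are used directly as roles, whereas the real system presumably asks an LLM to decide them. The `role` and `goal` fields are chosen so that they could later be passed to an agent framework such as CrewAI.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentSpec:
    """Hypothetical description of one document-specific agent."""
    role: str
    goal: str
    section_title: str


def build_agent_specs(sections: dict[str, str]) -> list[AgentSpec]:
    """Create one agent spec per non-empty document section.

    `sections` maps a section title to its text. Using the title as the
    role is an assumption made to keep the example self-contained.
    """
    specs = []
    for title, text in sections.items():
        if not text.strip():
            continue  # skip empty sections: nothing to specialize on
        specs.append(
            AgentSpec(
                role=f"{title} Agent",
                goal=f"Answer questions using only the '{title}' section.",
                section_title=title,
            )
        )
    return specs


# Toy contract with one empty section that should produce no agent.
contract = {
    "Liability Clause": "The supplier's liability is capped at the fees paid.",
    "Termination": "Either party may terminate with 30 days' notice.",
    "Appendix": "   ",
}
specs = build_agent_specs(contract)
print([spec.role for spec in specs])
```

Keeping the specs as plain data makes it easy to regenerate them whenever the source document changes.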

Key Innovations Driving Performance

Beyond dynamic agents, we integrated several cutting-edge techniques, many extending the capabilities of the Pathway data processing framework:

Advanced Contextual Retrieval and Re-ranking

Getting the right information to the agents is paramount. The system employs a sophisticated multi-stage retrieval process:

- Contextual Chunking: Documents are split into chunks, but crucially, each chunk is stored alongside its surrounding context (using a custom Pathway splitter). This helps the system understand the relevance of information more deeply.

- Hybrid Search: It combines dense embeddings (capturing semantic meaning, via Pathway) with sparse embeddings (capturing keyword relevance, using a custom Splade encoder integrated into Pathway). This hybrid approach provides a more nuanced understanding of relevance than either method alone.

- Rank Fusion: Results from dense and sparse searches are combined using a rank fusion formula to produce an initial ranked list of chunks.

- Re-ranking: A transformer-based model then re-evaluates and re-ranks these initial chunks, pushing the most contextually relevant ones to the top and penalizing noise. This ensures high-quality information feeds the generation process.
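The rank fusion step can be sketched as follows. The article does not say which fusion formula is used, so this example assumes reciprocal rank fusion (RRF), one common choice, with the conventional constant `k = 60`. The function name and chunk ids are illustrative only.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of chunk ids into one list.

    Each chunk receives 1 / (k + rank) from every list it appears in,
    where rank starts at 1. Higher total score means a better position.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] += 1.0 / (k + rank)
    # Sort by score (descending); break ties by chunk id for determinism.
    return sorted(scores, key=lambda chunk_id: (-scores[chunk_id], chunk_id))


dense_results = ["c3", "c1", "c2"]   # ranked by dense (semantic) search
sparse_results = ["c1", "c4", "c3"]  # ranked by sparse (keyword) search
fused = reciprocal_rank_fusion([dense_results, sparse_results])
print(fused)
```

Chunks that appear near the top of both lists rise to the top of the fused list, which then becomes the input to the re-ranker.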

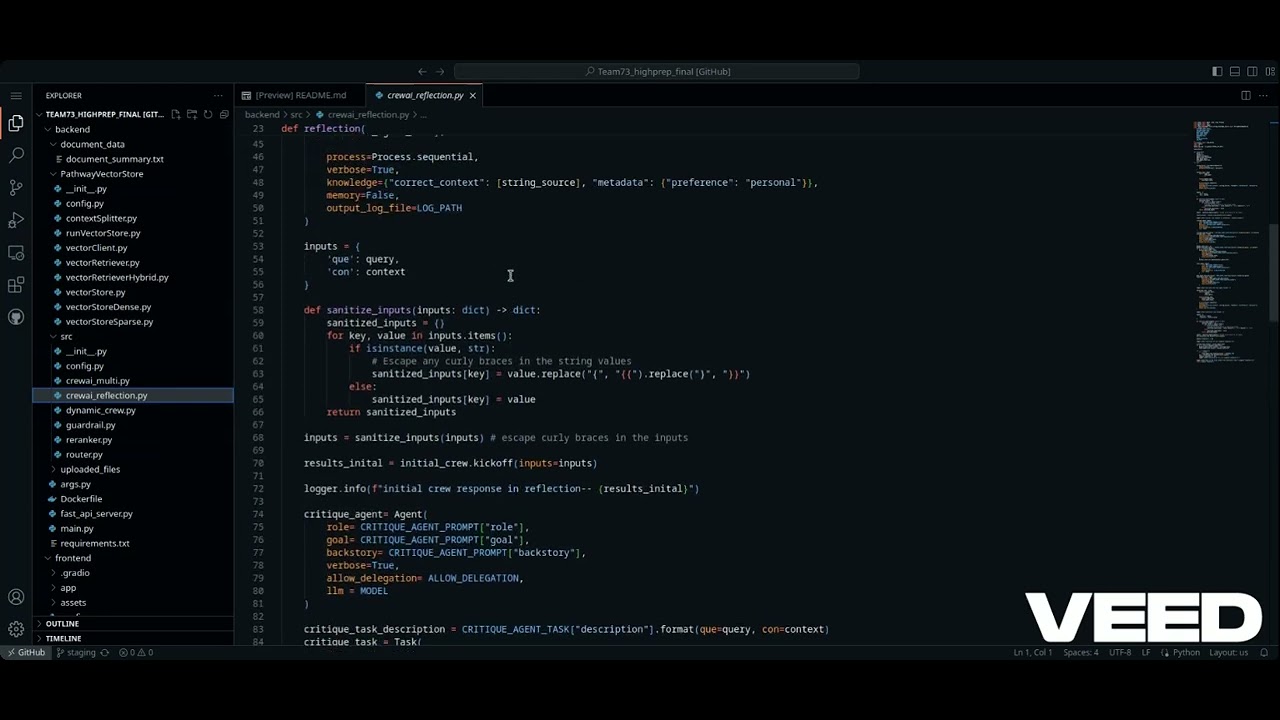

The Reflection Loop: AI Critiquing AI

Inspired by the concept of Self-RAG, this system incorporates a Critique Agent.

- The Process: After the selected agents generate their initial responses based on the retrieved context and the query, the Critique Agent evaluates these responses. It checks for accuracy, relevance, consistency with the source material, and overall coherence.

- Iterative Refinement: The critique agent provides constructive feedback to the other agents. This process repeats for a set number (N) of iterations (defaulting to 2 in our tests). Each loop allows the agents to refine their outputs, leading to progressively better, more accurate, and contextually grounded final answers. This is a powerful mechanism for reducing hallucinations and improving quality.
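The reflection loop described above can be sketched in a few lines. This is a simplified illustration, not the system's implementation: `generate` and `critique` stand in for LLM-backed agents, and stopping early when the critic has no feedback is an assumption added here.

```python
from typing import Callable, Optional

# (query, feedback) -> answer; feedback is None on the first attempt.
Generate = Callable[[str, Optional[str]], str]
# (query, answer) -> feedback, or None when the critic is satisfied.
Critique = Callable[[str, str], Optional[str]]


def reflect(query: str, generate: Generate, critique: Critique,
            n_iterations: int = 2) -> str:
    """Run up to `n_iterations` critique-and-revise cycles."""
    answer = generate(query, None)
    for _ in range(n_iterations):
        feedback = critique(query, answer)
        if feedback is None:
            break  # assumption: stop early once the critic has no objections
        answer = generate(query, feedback)
    return answer


# Toy stand-ins for the LLM-backed agents.
def toy_generate(query: str, feedback: Optional[str]) -> str:
    base = "The liability cap is 12 months of fees"
    return base if feedback is None else f"{base} (see Section 9.2)"


def toy_critique(query: str, answer: str) -> Optional[str]:
    return None if "Section" in answer else "Cite the supporting section."


final = reflect("What is the liability cap?", toy_generate, toy_critique)
print(final)
```

With `n_iterations=0` the first draft is returned unchanged, which corresponds to the no-reflection baseline.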

Intelligent Routing

With potentially many adaptive agents created, how does the system know which ones to engage for a specific query? A dedicated Router Agent steps in. It analyzes the user's query and selects the subset of dynamically generated agents most relevant to answering it. This optimizes resource usage (fewer agents activated) and ensures the response generation process is focused and efficient.
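To make the routing idea concrete, here is a deliberately simple sketch. The actual Router Agent is presumably LLM-based; this example swaps in keyword overlap between the query and each agent's description as a stand-in, and all names are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def route(query: str, agent_descriptions: dict[str, str], top_k: int = 2) -> list[str]:
    """Return up to `top_k` agent names whose descriptions overlap the query."""
    query_tokens = tokenize(query)
    scored = []
    for name, description in agent_descriptions.items():
        overlap = len(query_tokens & tokenize(description))
        if overlap > 0:  # agents with no overlap are never activated
            scored.append((overlap, name))
    # Highest overlap first; break ties alphabetically for determinism.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:top_k]]


agents = {
    "Liability Clause Agent": "liability cap indemnification damages",
    "Termination Agent": "termination notice period renewal",
    "Payment Terms Agent": "payment invoice fees late interest",
}
selected = route("What notice period applies to termination?", agents)
print(selected)
```

Only the selected agents join the crew for that query, which is where the resource savings come from.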

Enhancing the Pathway Framework

We didn't just use Pathway; we extended it. We integrated a Sparse Embedder (Splade), developed a Contextual Retrieval Splitter, and added functionality to store Document Summaries within the Pathway vector store, potentially aiding in agent creation or providing high-level context.
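The contextual splitter can be illustrated with a small, framework-free sketch. It does not use the Pathway API; `ContextualChunk`, `split_with_context`, and the `window` parameter are hypothetical, and treating neighbouring paragraphs as the "surrounding context" is an assumption about how such a splitter might work.

```python
from dataclasses import dataclass


@dataclass
class ContextualChunk:
    text: str     # the chunk that is embedded and retrieved
    context: str  # neighbouring chunks stored alongside it


def split_with_context(paragraphs: list[str], window: int = 1) -> list[ContextualChunk]:
    """Pair every paragraph with up to `window` neighbours on each side."""
    chunks = []
    for i, paragraph in enumerate(paragraphs):
        start = max(0, i - window)
        end = min(len(paragraphs), i + window + 1)
        # Neighbours before and after, excluding the paragraph itself.
        neighbours = paragraphs[start:i] + paragraphs[i + 1:end]
        chunks.append(ContextualChunk(text=paragraph, context=" ".join(neighbours)))
    return chunks


doc = ["Clause 1: Scope.", "Clause 2: Fees.", "Clause 3: Liability."]
chunks = split_with_context(doc)
for chunk in chunks:
    print(repr(chunk.text), "| context:", repr(chunk.context))
```

Storing the context next to the chunk lets later stages, such as the re-ranker or the agents, see where a passage sits in the document without retrieving additional chunks.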

Collaborative Agents with CrewAI

The selected agents, along with a Meta Agent (responsible for synthesizing the final answer) and the Critique Agent, form a "Crew" using the CrewAI framework. This framework facilitates communication and task delegation between agents. They share a common knowledge base (refined query + retrieved context) and can leverage each other's outputs, enabling collaborative problem-solving for complex queries.

Putting It All Together: System Architecture

Visualizing the flow helps understand how these components interact (referencing Figure 4):

- Ingestion: A document (e.g., from Google Drive via the UI) is parsed and processed.

- Embedding & Agent Creation: Contextual chunks are created and embedded (dense & sparse) using the enhanced Pathway pipeline. Simultaneously, adaptive agents tailored to the document are generated via CrewAI.

- Query Processing: A user query arrives and undergoes guardrail checks and refinement.

- Retrieval & Routing: The refined query retrieves the most relevant contextual chunks (using hybrid search, rank fusion, re-ranking). The Router Agent selects the most relevant adaptive agents.

- Agent Crew Execution: The selected agents, Meta Agent, and Critique Agent form a Crew. They access the knowledge base (query + context) and begin processing.

- Reflection: The Critique Agent reviews agent outputs, providing feedback for N iterative refinement cycles.

- Synthesis: The Meta Agent synthesizes the refined responses into a final answer.

- Validation & Delivery: The final response passes through output guardrails before being delivered to the user.

Does It Work? Evaluation Insights

We tested this system, primarily using the CUAD dataset (legal/financial contracts), evaluating different configurations. We measured:

- Semantic Similarity: How closely the generated answer matches the ground truth (using RAGAS).

- Answer Relevancy: How well the answer addresses the prompt, avoiding redundancy (using RAGAS).

- Time of Inference: How long it takes to get an answer.

- Judge LLM: Using another LLM to provide an independent quality score.
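For intuition about the Semantic Similarity metric: as we understand it, the score is based on the cosine similarity between an embedding of the generated answer and an embedding of the ground truth. The sketch below computes that similarity on toy vectors. It does not call RAGAS, and the two-dimensional vectors are made up for illustration.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_a * norm_b)


answer_vec = [3.0, 4.0]  # toy embedding of the generated answer
truth_vec = [4.0, 3.0]   # toy embedding of the ground-truth answer
score = cosine_similarity(answer_vec, truth_vec)
print(round(score, 2))
```

In practice the vectors would come from an embedding model and have hundreds of dimensions, but the calculation is the same.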

Key Findings:

- Reflection Works: Increasing reflection iterations (from n=0 to n=2) consistently improved both Semantic Similarity and Answer Relevancy, demonstrating the effectiveness of the critique loop.

- Contextual Retrieval & Re-ranking Boost Quality: Pipelines using these techniques generally outperformed those without, showing their value in providing better context.

- Trade-offs: There's a clear trade-off between response quality and speed. More reflection iterations significantly increase inference time. The best configuration achieved ~90% Semantic Similarity and ~95% Answer Relevancy but took around 45 seconds.

These results validate the core concepts, showing measurable improvements in response quality at the cost of increased computation.

Tackling the Hurdles: Challenges and Solutions

Developing such a sophisticated system isn't without challenges:

- Reliability & Safety: Ensuring accurate, non-toxic outputs. Addressed by input/output Guardrails and the Critique Agent's refinement.

- Hallucinations (Multi-Agent Cascading): One agent's error could mislead others. The Reflection Loop is key to catching and correcting these before they propagate.

- Complexity & Efficiency: Managing multiple agents and complex retrieval adds overhead. Adaptive Agent Formation and Routing help by activating only necessary agents. Contextual Retrieval optimizes the information quality/quantity trade-off.

- Evaluation: Lack of standard metrics for agentic RAG. Addressed by using a combination of metrics like Semantic Similarity, Answer Relevancy, and a Judge LLM.

When Things Go Wrong: Robust Error Handling

What if the system can't find a good answer, or the user isn't satisfied? We implemented a fallback mechanism. If the pipeline encounters an error or the user flags the response, it can trigger a web search using APIs like JinaAI (with Exa AI as a backup), providing an alternative route to information.
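The fallback chain can be sketched as a sequence of callables tried in order. This is an illustrative sketch only: the function and parameter names are hypothetical, and the web search providers are represented by stub functions rather than real API calls.

```python
from typing import Callable, Sequence

AnswerFn = Callable[[str], str]


def answer_with_fallback(query: str, pipeline: AnswerFn,
                         web_searches: Sequence[AnswerFn],
                         user_flagged: bool = False) -> str:
    """Try the RAG pipeline first, then each web search in order."""
    if not user_flagged:
        try:
            return pipeline(query)
        except Exception:
            pass  # pipeline error: fall through to web search
    for search in web_searches:
        try:
            return search(query)
        except Exception:
            continue  # this provider failed: try the next one
    raise RuntimeError("all answer sources failed")


# Stubs standing in for the pipeline and the two search providers.
def broken_pipeline(query: str) -> str:
    raise TimeoutError("pipeline timed out")


def primary_search(query: str) -> str:
    raise ConnectionError("primary search unavailable")


def backup_search(query: str) -> str:
    return f"backup: {query}"


result = answer_with_fallback("q", broken_pipeline, [primary_search, backup_search])
print(result)
```

Passing `user_flagged=True` skips the pipeline entirely, which models the case where the user has rejected its answer and asks for a web search instead.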

Interacting with the System: The User Interface

Accessibility is key. We built a Gradio-based UI allowing users to:

- Input a Google Drive folder URL and credentials.

- Submit queries.

- See the "thinking process" of the agents streamed in real-time.

- Access the web search fallback.

This interface, styled with a nod to the Pathway framework, makes the complex backend processes accessible and transparent.

Building Trust: Responsible AI Practices

We emphasize responsible AI throughout the pipeline:

- Query Intake Validation: Guardrails check incoming queries for safety and appropriateness.

- Response Safeguarding: Guardrails validate the final output for contextual accuracy, ethical alignment, and relevance, filtering out toxic or misleading content.

- Agent Oversight: The Meta Agent synthesizes information, and the Critique Agent acts as a guardrail through iterative review, enhancing reliability.

Lessons Learned and the Road Ahead

Building this system offered valuable insights:

- Iterative Refinement is Powerful: Loops within agent interactions (like the reflection cycle) significantly improve outcome quality by allowing for debate, correction, and deeper task exploration.

- Adaptability is Key: Adaptive agent formation proved crucial for handling diverse data and use cases effectively.

The future could involve further optimizing agent communication, exploring more sophisticated memory mechanisms for agents, reducing latency, and expanding the range of supported data sources and use cases.

Conclusion: The Dawn of Smarter RAG

Our Real-time Agentic RAG approach with Pathway marks a significant advancement beyond traditional RAG. By incorporating adaptive agent formation, advanced contextual retrieval, and a powerful reflection mechanism, we've created a system that is more adaptable, accurate, and robust, particularly when dealing with complex queries and evolving information landscapes.

While challenges like computational cost remain, this approach demonstrates the immense potential of agentic AI to create truly intelligent information retrieval systems. It paves the way for applications that can understand context, reason dynamically, and deliver trustworthy insights from complex data – moving us closer to AI that doesn't just retrieve, but truly understands.

Frequently Asked Questions (FAQ)

Q1: What is the main difference between this and standard RAG?

A1: The key differences are adaptive agent generation (agents tailored to the document at runtime), the use of a critique agent for iterative reflection/improvement, and advanced contextual retrieval with hybrid search and re-ranking. Standard RAG is typically more static.

Q2: What roles do Pathway and CrewAI play?

A2: Pathway is used as the underlying data processing framework, handling tasks like chunking, embedding (both dense and custom sparse), and vector storage. CrewAI is used to orchestrate the AI agents, enabling their creation, task assignment, and communication within the "crew."

Q3: How does the "Reflection" part work?

A3: A dedicated "Critique Agent" examines the responses generated by other agents based on the query and retrieved context. It provides feedback, and the agents revise their responses. This loop repeats a few times to improve accuracy and relevance.

Q4: Is this system much slower than normal RAG?

A4: Yes, the added complexity, especially the reflection loops, increases the time it takes to get an answer (inference time), as shown in the evaluations. There's a trade-off between response quality and speed.

Q5: Can this handle any type of document?

A5: Adaptive agent formation makes the system adaptable across domains, but not automatically to every document. It has been applied to legal and financial contracts (the CUAD dataset) and shows potential for healthcare records. Suitability ultimately depends on whether the document can be parsed and whether meaningful specialized agents can be generated from it.

If you are interested in diving deeper into the topic, here are some good references to get started with Pathway:

- Pathway Developer Documentation

- Pathway App Templates

- Discord Community of Pathway

- Power and Deploy RAG Agent Tools with Pathway

- End-to-end Real-time RAG app with Pathway

For more information on the concepts and frameworks we used, these are good places to start:

- CrewAI Quickstart

- RAGAS Evaluation Metrics

- Jina AI Reader API

- Exa AI Web Search API

- Self-RAG concept (arXiv Paper)

Authors

Pathway Community

Multiple authors